It is often useful to be able to synchronize the contents of certain folders. Yesterday I needed a way to make sure that files in separate folders on some of my servers are kept up to date, so I wrote the script in this post. Incidentally, it also proved useful for syncing some private files between Dropbox and Google Drive. 🙂

How does the script work?

The basic idea of the script is to sync files from a source dir to a destination dir. Since I work with many large files, I only want to copy a file if there actually is a difference between its versions in the source and destination dirs. To do all this I came up with these logical steps:

1. Loop through all the files in the source dir.

2. If the file doesn't exist in the destination dir, copy it.

3. If the file exists in the destination dir, calculate the MD5 hashes of both the source and destination files and compare them. If the hashes match, the files are identical and the file can be skipped; if not, copy the file.
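On PowerShell 4.0 or later, the hashing in step 3 can also be done with the built-in Get-FileHash cmdlet. The sketch below is an alternative to the hand-written Get-FileMD5 function used in the script, not what the script itself does. The function name Test-FileInSync is hypothetical, and the file-size pre-check is an extra shortcut that is not part of the steps above: two files with different sizes cannot be identical, so there is no need to hash them.

```powershell
# Hypothetical helper: returns $true when $Destination exists and has the same
# content as $Source, i.e. the copy can be skipped. Requires PowerShell 4.0+.
function Test-FileInSync {
    param(
        [Parameter(Mandatory = $true)][string]$Source,
        [Parameter(Mandatory = $true)][string]$Destination
    )
    # Step 2: a missing destination file always needs to be copied
    if (-not (Test-Path -LiteralPath $Destination)) { return $false }

    # Extra shortcut (not in the original steps): different sizes mean different content
    $srcItem = Get-Item -LiteralPath $Source
    $dstItem = Get-Item -LiteralPath $Destination
    if ($srcItem.Length -ne $dstItem.Length) { return $false }

    # Step 3: equal MD5 hashes mean the files are identical
    $srcHash = (Get-FileHash -LiteralPath $Source -Algorithm MD5).Hash
    $dstHash = (Get-FileHash -LiteralPath $Destination -Algorithm MD5).Hash
    return ($srcHash -eq $dstHash)
}
```

Inside the main loop this would be used as `if (-not (Test-FileInSync -Source $src -Destination $dest)) { ... copy ... }`.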

The script itself

The comments I put in the code should explain how I built the script according to the logical steps above.

```powershell
###############################################################################
##script: Sync-Folders.ps1
##
##Description: Syncs/copies contents of one dir to another. Uses MD5
#+ checksums to verify the version of the files and if they
#+ need to be synced.
##Created by: Noam Wajnman
##Creation Date: June 9, 2014
###############################################################################
#FUNCTIONS
function Get-FileMD5 {
    Param([string]$file)
    $md5 = [System.Security.Cryptography.HashAlgorithm]::Create("MD5")
    #open the file read-only so that read-only files can also be hashed
    $IO = [System.IO.File]::OpenRead($file)
    try {
        $StringBuilder = New-Object System.Text.StringBuilder
        #append each byte of the hash as two lowercase hex digits
        $md5.ComputeHash($IO) | % { [void] $StringBuilder.Append($_.ToString("x2")) }
        return $StringBuilder.ToString()
    }
    finally {
        #release the file handle and the hash object even if hashing fails
        $IO.Dispose()
        $md5.Clear()
    }
}
#VARIABLES
$DebugPreference = "continue"
#parameters
$SRC_DIR = 'c:\sourcefolder\'
$DST_DIR = 'C:\destfolder\'
#SCRIPT MAIN
clear
$SourceFiles = GCI -Recurse $SRC_DIR | ? { $_.PSIsContainer -eq $false } #get the files in the source dir.
$SourceFiles | % { # loop through the source dir files
    $src = $_.FullName #current source dir file
    Write-Debug $src
    #current destination dir file: replace the leading source dir with the destination dir.
    #[regex]::Escape makes characters such as '.', '(' and ')' in the path match literally.
    $dest = $src -replace ('^' + [regex]::Escape($SRC_DIR)), $DST_DIR
    if (Test-Path -LiteralPath $dest) { #if file exists in destination folder check MD5 hash
        $srcMD5 = Get-FileMD5 -file $src
        Write-Debug "Source file hash: $srcMD5"
        $destMD5 = Get-FileMD5 -file $dest
        Write-Debug "Destination file hash: $destMD5"
        if ($srcMD5 -eq $destMD5) { #if the MD5 hashes match then the files are the same
            Write-Debug "File hashes match. File already exists in destination folder and will be skipped."
            $cpy = $false
        }
        else { #if the MD5 hashes are different then copy the file and overwrite the version in the destination dir
            $cpy = $true
            Write-Debug "File hashes don't match. File will be copied to destination folder."
        }
    }
    else { #if the file doesn't exist in the destination dir it will be copied.
        Write-Debug "File doesn't exist in destination folder and will be copied."
        $cpy = $true
    }
    Write-Debug "Copy is $cpy"
    if ($cpy -eq $true) { #copy the file if the hashes differ or if it doesn't exist in the destination dir.
        Write-Debug "Copying $src to $dest"
        if (!(Test-Path -LiteralPath $dest)) {
            #creating the file with -Force also creates any missing parent dirs; Out-Null hides the output
            New-Item -ItemType "File" -Path $dest -Force | Out-Null
        }
        Copy-Item -LiteralPath $src -Destination $dest -Force
    }
}
```

How do I use/run the script?

First of all, remember to enter the paths of your source and destination folders ($SRC_DIR and $DST_DIR) in the #parameters section of the script.

You can run the script manually and synchronize as needed, but I recommend using the Windows Task Scheduler to run it regularly and keep your folders synchronized at all times with minimal effort.

To configure a scheduled task that runs the script, follow the steps below (for Windows Server 2008 R2/Windows 7).

1. Start the windows task scheduler.

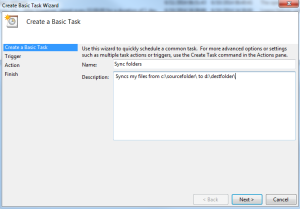

2. Stand on “task scheduler library” and click “Create Basic Task”.

3. Give your task a name and a description.

4. In the next steps choose a schedule for the task and Click “Next” until you get to “action”.

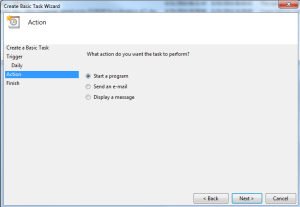

5. Choose “Start a program” and click “Next”.

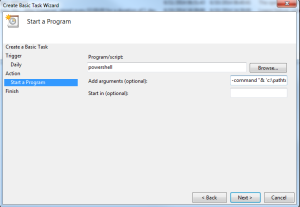

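6. Under “Program/script:” simply write powershell. In the “Add arguments:” field write `-command "& 'c:\pathtoscript\sync-folders.ps1'"` (change c:\pathtoscript\ to the folder where your sync-folders.ps1 file is located). Type the quotes as plain straight quotes (' and "); look-alike quote characters pasted from a web page can make PowerShell fail with a "string is missing the terminator" error. Click “Next”.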

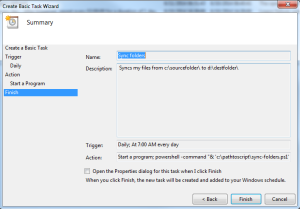

7. You should now see a summary of the task.

8. Click “Finish”.

That’s it! You’re done.

I hope you find the script useful. Good luck!

What if you want to sync to multiple destinations and the destinations are authenticated via AD? Is there any way I can force it to log in to AD, or at least prompt me to log in?

You would probably need to add some lines to the script where you map the destination drives with whatever credentials you need. Syncing to multiple places is not a problem: you just need to make $DST_DIR an array with all the destinations you need and then loop through them, executing all the code in the #SCRIPT MAIN section for each one.

All this does require some significant changes to the script though.
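Below is a rough sketch of that idea. It assumes the #SCRIPT MAIN code has been wrapped in a scriptblock that takes the source and destination dirs as parameters. It also uses `net use` to log in to each UNC share with credentials from Get-Credential, which prompts you for a user name and password. The function name Sync-ToDestinations and the share paths are hypothetical.

```powershell
# Hypothetical helper: runs $SyncAction once per destination dir. If a credential is
# given, each UNC destination (\\server\share\...) is first logged in to with net use.
function Sync-ToDestinations {
    param(
        [Parameter(Mandatory = $true)][string]$SrcDir,
        [Parameter(Mandatory = $true)][string[]]$DstDirs,
        [Parameter(Mandatory = $true)][scriptblock]$SyncAction,
        [System.Management.Automation.PSCredential]$Credential
    )
    foreach ($dst in $DstDirs) {
        if ($Credential -and $dst.StartsWith('\\')) {
            # net use only accepts the \\server\share part of the path
            $parts = ($dst -replace '^\\\\', '') -split '\\'
            $share = '\\' + $parts[0] + '\' + $parts[1]
            $password = $Credential.GetNetworkCredential().Password
            net use $share $password "/user:$($Credential.UserName)" | Out-Null
        }
        Write-Debug "Syncing $SrcDir to $dst"
        # The scriptblock receives the source dir and the current destination dir
        & $SyncAction $SrcDir $dst
    }
}

# Example (paths are placeholders):
# $cred = Get-Credential   # prompts for DOMAIN\user and password
# Sync-ToDestinations -SrcDir 'c:\sourcefolder\' `
#     -DstDirs '\\server1\share\destfolder\', '\\server2\share\destfolder\' `
#     -Credential $cred -SyncAction { param($SRC_DIR, $DST_DIR) ...the SCRIPT MAIN code... }
```

Because net use opens a real Windows connection to the share, the .NET-based Get-FileMD5 function can reach the UNC paths afterwards. A PowerShell-only drive created with New-PSDrive without -Persist would not be visible to .NET calls.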

It doesn’t work with network paths starting with “\\” or “http://”.

Any solutions?

You could try to map the drive so you don’t have to use UNC path notation.

For some unknown reason the Get-ChildItem command cannot access the network drives either.

Check your permissions. When I use Get-ChildItem with UNC paths, it works.

Try using "" not '' around the UNC path.

Thanks Noam. What about files deleted from the source? Can you suggest some added lines to remove files from the destination that were removed from the source?

At the end of the script you would need to loop through the destination files and check whether they exist in the source (and delete them from the destination if they don’t). Something like adding this to the end:

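```powershell
Get-ChildItem -Recurse $DST_DIR | ? { $_.PSIsContainer -eq $false } | % {
    #work out where this destination file would be in the source dir (handles subfolders too)
    $srcPath = $_.FullName -replace ('^' + [regex]::Escape($DST_DIR)), $SRC_DIR
    if (!(Test-Path -LiteralPath $srcPath)) { #the file no longer exists in the source dir
        Remove-Item -LiteralPath $_.FullName
    }
}
```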

Please be aware that I haven’t tested this, and you might need to tweak it a little to get it to work as expected.

Hope it helps.

Thanks for the script Noam, very helpful!

What is the variable $DebugPreference for?

Do you think PowerShell can write the “Write-Debug” output to a file and then send it by email at the end of the script?

Regards,

Andy

Hi Andy,

Glad it helped you. $DebugPreference controls whether debug messages are displayed and whether the script should stop (or ask you) when one is written. Setting it to "continue", as the script does, makes Write-Debug print its messages and keep running.

I don’t know if the debug messages are stored in a built-in variable, but I don’t think so. I suggest you just declare a string variable yourself and append the debug messages to it. Then you can simply send it in an email at the end of the script execution.

\Noam
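Here is a minimal sketch of the approach suggested above. The names Write-SyncLog, Send-SyncLog and $script:SyncLog are hypothetical, and the SMTP server and addresses passed to Send-SyncLog are placeholders. You would replace the Write-Debug calls in the script with Write-SyncLog and call Send-SyncLog once at the end.

```powershell
# Collected log text for this run (the string value suggested above)
$script:SyncLog = ''

# Hypothetical helper: use it in place of Write-Debug throughout the script
function Write-SyncLog {
    param([string]$Message)
    # Still shown on screen when $DebugPreference = "continue"
    Write-Debug $Message
    # Also kept, with a timestamp, for the email
    $script:SyncLog += "$(Get-Date -Format 's') $Message`r`n"
}

# Hypothetical helper: call once at the end of the script
function Send-SyncLog {
    param([string]$SmtpServer, [string]$From, [string]$To)
    Send-MailMessage -SmtpServer $SmtpServer -From $From -To $To `
        -Subject "Sync-Folders report $(Get-Date -Format 'yyyy-MM-dd')" -Body $script:SyncLog
}
```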

Hello Noam,

Newly created directories are not being copied to the destination location. How can I handle this?

Regards,

Deep

Hi Deep,

New dirs and files should be copied/synchronized. Just remember that they need to be in the $SRC_DIR directory.

\Noam

Is there a way to specifically sync only .pdf file types, and ignore all other file extensions?

In the line that sets $SourceFiles (under #SCRIPT MAIN), you can add a condition so it looks like this:

```powershell
$SourceFiles = GCI -Recurse $SRC_DIR | ? { $_.PSIsContainer -eq $false -and $_.extension -eq '.pdf' }
```

That should do the trick.

I’m seeing the same as Deep: new directories in the source are not being created in the destination.

Receiving this error: Copy-Item : Container cannot be copied onto existing leaf item.

What if you specify an empty dir as the destination?
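One likely cause of the missing directories is that the main loop keeps only files (`$_.PSIsContainer -eq $false`). A destination folder is created only as a side effect of `New-Item -Force` when a file inside it is copied, so a source directory that contains no files never reaches the destination. The hypothetical function Copy-DirectoryTree below is a sketch that creates every source directory, empty or not, in the destination. You would call it before the file loop. It assumes both paths are written the same way, for example both ending with a backslash as in the #parameters section.

```powershell
# Hypothetical helper: creates every directory under $SourceDir (including empty ones)
# at the matching location under $DestinationDir.
function Copy-DirectoryTree {
    param(
        [Parameter(Mandatory = $true)][string]$SourceDir,
        [Parameter(Mandatory = $true)][string]$DestinationDir
    )
    $pattern = '^' + [regex]::Escape($SourceDir)
    Get-ChildItem -LiteralPath $SourceDir -Recurse | Where-Object { $_.PSIsContainer } | ForEach-Object {
        # Map the source directory path onto the destination dir
        $destDir = $_.FullName -replace $pattern, $DestinationDir
        if (-not (Test-Path -LiteralPath $destDir)) {
            # -Force also creates any missing parent directories
            New-Item -ItemType Directory -Path $destDir -Force | Out-Null
        }
    }
}

# Example: Copy-DirectoryTree -SourceDir $SRC_DIR -DestinationDir $DST_DIR
```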

FreeFileSync is an open-source folder comparison and synchronization tool that is optimized for high performance and usability, without an overloaded user interface.

The tool can compare files by content, size or date.

A user simply drags and drops the folders they want to compare or synchronize.

The script runs great for me. I had to add some credentials under the parameters and a net use command in the main section, but my issue is that the file path length in some spots is over 260 characters. Is there a way to truncate those paths or log the errors?

I think you will probably always have problems with paths that long. However, you can set up logging pretty easily.

I would probably create a small function that takes a message as a parameter and appends it, with a timestamp, to a log file. Then I could call it whenever a copy fails.
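Below is a sketch of such a logging setup. It assumes a hypothetical log file location and the hypothetical function names Write-Log and Copy-FileWithLog. In the script you would replace the Copy-Item line with `Copy-FileWithLog -Source $src -Destination $dest`. It only records failed copies; it does not shorten long paths.

```powershell
# Hypothetical log file location; change as needed
$LogFile = Join-Path ([System.IO.Path]::GetTempPath()) 'Sync-Folders.log'

# Appends a timestamped message to the log file (creating the file if needed)
function Write-Log {
    param([Parameter(Mandatory = $true)][string]$Message)
    $line = '{0} {1}' -f (Get-Date -Format 'yyyy-MM-dd HH:mm:ss'), $Message
    Add-Content -LiteralPath $LogFile -Value $line
}

# Copies one file; on failure, logs the error and returns $false instead of stopping the run
function Copy-FileWithLog {
    param([string]$Source, [string]$Destination)
    try {
        # -ErrorAction Stop turns a failed copy (e.g. a path that is too long) into a catchable exception
        Copy-Item -LiteralPath $Source -Destination $Destination -Force -ErrorAction Stop
        return $true
    }
    catch {
        Write-Log "Failed to copy '$Source' to '$Destination': $($_.Exception.Message)"
        return $false
    }
}
```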

Hi,

Was wondering what license your script is under? I’m making some modifications to it for my own purposes and wondered about possibly adding it to an open-source repo for others to use and improve upon.

Cheers,

Chris

Sure. Go ahead.

Okay, I’ll post back when I have a repo up and working. Thanks for the script, by the way!

The script runs fine when I run it manually in PowerShell. When I create a scheduled task, I get an error that says: The string is missing the terminator: ".

+ CategoryInfo : ParserError: (:) [], ParentContainsErrorRecordException

It seems the script doesn’t see changes in a file: if you open a file in the source, modify its text and run the script, the changes are not synced.